Extracting relevant information from unstructured, semi-structured, and structured document images is a common task in many commercial products. Many information extraction techniques exist for business documents. Let’s get acquainted with the principles of information extraction from documents.

Author: Goran Sukovic, PhD in Mathematics, Faculty of Natural Sciences and Mathematics, University of Montenegro

For many industries, such as financial companies or medical institutions, documents are a primary tool for record-keeping, communication, collaboration, and transactions. Examples of such documents are tax forms, medical records, invoices, customer emails, purchase orders, support tickets, and product reviews. A huge amount of information is locked in unstructured or semi-structured documents.

Processing this data manually is costly and time-consuming. Automating the business processes built around these documents is usually complex: we have to handle heterogeneous types of incoming documents in close to real time, and because the number of documents can be huge, we are also faced with a large-scale problem.

The automation process involves numerous tasks, the most common being document classification, multi-page document handling, document understanding, routing, and information extraction. For now, we will focus on the information extraction problem.

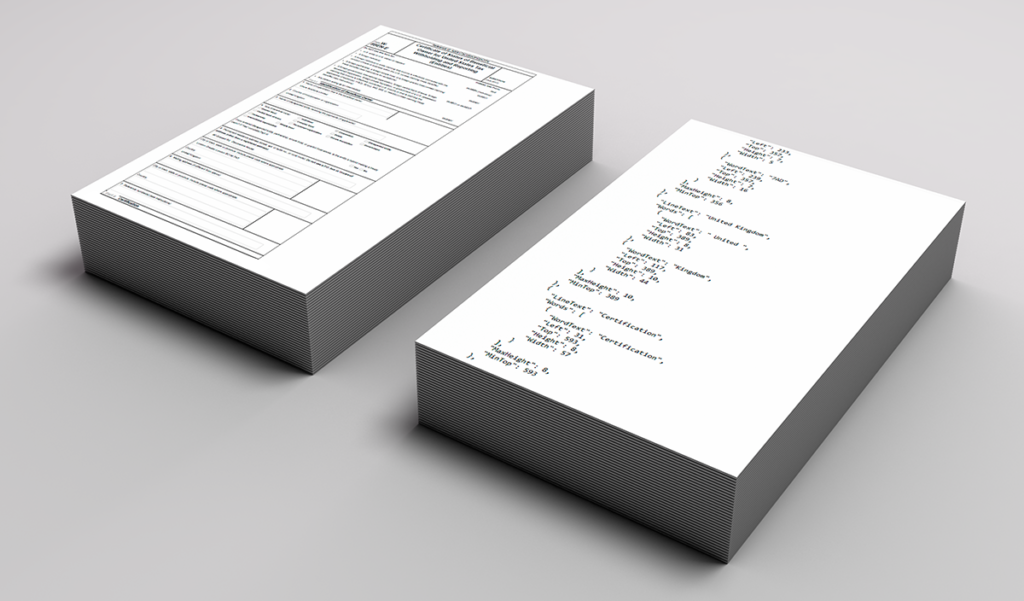

Information extraction can be defined as the task of extracting relevant information from unstructured, semi-structured, and structured document images. For example, when processing incoming invoices, we want to extract relevant fields such as the invoice number, the issuer’s name and address, the date, the total amount, etc. Such services are offered in many commercial products. Most information extraction systems are built on knowledge derived from a database of documents the system has already processed.

Bird’s-eye view of techniques for field extraction from business documents

Approaches to information extraction fall into two broad categories:

– patterns and rules

– token classification

An intuitive approach is to use patterns found in unstructured data to extract the information.

Rule-based systems generally assume that documents of one type issued by the same supplier follow the same layout or template. In broad terms, they let the user define a set of rules for each supplier; the rules are either hand-written or induced from labeled examples using broad classes of patterns. Rules are typically based on keywords, absolute and relative positions, and regular expressions. If we wish to extract a date such as “2020-02-23”, we can construct a regular expression that matches strings with the following structure: four digits, a dash, two digits, a dash, and two digits. Such an expression captures every date written in the ISO 8601 YYYY-MM-DD format, but it will also match the invalid “6789-89-77”, and it won’t match “Feb. 24, 2020”. More patterns could be created to cover the various ways of writing dates. Rules can also combine keywords with relative positions, for instance: “The word next to the word Date represents the date.” or “The first word to the right of the word total is the total amount.”
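The date rule described above can be written as a Python regular expression. This is a minimal sketch: `ISO_DATE` implements the four-digits, dash, two-digits, dash, two-digits pattern from the text, while `MONTH_DATE` and the helper `find_dates` are hypothetical additions showing how extra patterns could cover other date formats. Neither pattern checks that the month or day is in range.

```python
import re

# Pattern from the text: four digits, dash, two digits, dash, two digits.
ISO_DATE = re.compile(r"\b\d{4}-\d{2}-\d{2}\b")

# Hypothetical extra pattern for dates such as "Feb. 24, 2020" or "Feb 24, 2020".
MONTH_DATE = re.compile(
    r"\b(?:Jan|Feb|Mar|Apr|May|Jun|Jul|Aug|Sep|Oct|Nov|Dec)\.? \d{1,2}, \d{4}\b"
)

DATE_PATTERNS = [ISO_DATE, MONTH_DATE]

def find_dates(text):
    """Return every substring matched by any date pattern, grouped by pattern order."""
    found = []
    for pattern in DATE_PATTERNS:
        found.extend(m.group(0) for m in pattern.finditer(text))
    return found

print(find_dates("Issued 2020-02-23, due Feb. 24, 2020."))
print(find_dates("Ref no. 6789-89-77"))  # the invalid date is still matched
```

The second call illustrates the weakness mentioned above: the ISO pattern only checks the shape of the string, so “6789-89-77” is accepted as a date.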

The main strength of rule-based information extraction systems is that they are interpretable, flexible, and easily modified [Chiticariu et al., 2013], [Palm, 2019]. Rules can be understood and modified by users, and system errors can be easily traced back to rules. Patterns and rules for a new task can be easily created based on predefined classes of patterns. The main drawback is the need to create and adjust the rules manually. Unfortunately, such systems are heuristic, i.e., there is no guarantee that their decisions are optimal in any sense [Chiticariu et al., 2013]. There is also a risk that minor spelling errors or formatting changes will throw a rule off.
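The keyword rules mentioned earlier (“The first word to the right of the word total is the total amount.”) can be sketched as a small rule table. In this minimal example, the table `RULES`, its field names, the helper `apply_rules`, and the whitespace tokenization are all assumptions for illustration. Each extracted value is stored together with the name of the rule that produced it, which is what makes errors traceable; the second call shows how a single misspelling silently defeats a rule.

```python
# Hypothetical rule table: (rule name, output field, keyword, offset of the value
# token relative to the keyword; 1 means "first token to the right").
RULES = [
    ("date_right_of_keyword", "date", "date", 1),
    ("total_right_of_keyword", "total", "total", 1),
]

def apply_rules(tokens):
    """Apply each rule to whitespace-split tokens; return {field: (value, rule name)}."""
    # Normalize case and a trailing colon so "Total:" matches the keyword "total".
    normalized = [t.lower().rstrip(":") for t in tokens]
    results = {}
    for rule_name, field, keyword, offset in RULES:
        for i, tok in enumerate(normalized):
            j = i + offset
            if tok == keyword and 0 <= j < len(tokens):
                # Keep the rule name next to the value so mistakes can be traced back.
                results[field] = (tokens[j], rule_name)
                break  # first match wins
    return results

print(apply_rules("Invoice Date: 2020-02-23 Total: 118.50 EUR".split()))
print(apply_rules("Invoice Date: 2020-02-23 Totl: 118.50 EUR".split()))
```

The first call returns both the date and the total, each tagged with its rule; the second returns only the date, because “Totl:” no longer matches the keyword and nothing signals that the total was missed.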

The other major approach to information extraction is token classification. The idea is to use supervised machine learning to classify which tokens to extract. There are two steps in this process: choosing a feature representation for the tokens, and choosing a classifier.
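To make “classify which tokens to extract” concrete, here is a minimal sketch of what per-token training labels might look like. The label scheme (the field name in upper case, “O” for every other token) and the helper `label_tokens` are hypothetical; deriving token labels by matching annotated field values against the token sequence is one simple option, not necessarily how any particular system does it.

```python
def label_tokens(tokens, fields):
    """Give every token a label: the field it belongs to, or "O" for other tokens.

    `fields` maps a field name to its annotated value, e.g. {"total": "118.50"}.
    Multi-word values are matched as runs of consecutive tokens.
    """
    labels = ["O"] * len(tokens)
    for field, value in fields.items():
        value_tokens = value.split()
        n = len(value_tokens)
        for start in range(len(tokens) - n + 1):
            if tokens[start:start + n] == value_tokens:
                for k in range(start, start + n):
                    labels[k] = field.upper()
                break  # label only the first occurrence
    return labels

tokens = "Acme Corp Invoice no. 1234 Total: 118.50 EUR".split()
labels = label_tokens(tokens, {"issuer": "Acme Corp", "total": "118.50"})
for tok, lab in zip(tokens, labels):
    print(f"{tok:10} {lab}")
```

Each token becomes one training example for the classifier, which is also why the approach needs every token to carry a label.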

The feature representation of a token is critical to the performance of any supervised classifier. Typical features include a one-hot encoding of the token or a learned vector embedding [Mikolov et al., 2013]. For classification, we can use any of the usual supervised learning techniques: logistic regression, decision trees, Support Vector Machines (SVM), Convolutional Neural Networks (CNN), Recurrent Neural Networks (RNN), or Conditional Random Fields (CRF).
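The two steps can be illustrated with a small, dependency-free sketch: one-hot features followed by logistic regression trained with stochastic gradient descent. Everything here is an assumption for illustration: the feature set (the lowercased token plus its left neighbour), the toy invoices, the hyperparameters, and the decision to return the highest-scoring token as the total. A real system would use a library implementation and far more labeled data.

```python
import math

def token_features(tokens, i):
    """Symbolic features for token i: the token itself and its left neighbour."""
    prev = tokens[i - 1].lower() if i > 0 else "<start>"
    return [f"word={tokens[i].lower()}", f"prev={prev}"]

def build_vocab(docs):
    """Give every distinct feature string its own column: the one-hot vocabulary."""
    vocab = {}
    for tokens in docs:
        for i in range(len(tokens)):
            for feat in token_features(tokens, i):
                vocab.setdefault(feat, len(vocab))
    return vocab

def active_columns(tokens, i, vocab):
    """Columns set to 1 in token i's one-hot vector; unseen features are dropped."""
    return [vocab[f] for f in token_features(tokens, i) if f in vocab]

def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def train(docs, labels, vocab, epochs=50, lr=0.5):
    """Binary logistic regression fitted with plain stochastic gradient descent."""
    w, b = [0.0] * len(vocab), 0.0
    for _ in range(epochs):
        for tokens, ys in zip(docs, labels):
            for i, y in enumerate(ys):
                cols = active_columns(tokens, i, vocab)
                g = sigmoid(b + sum(w[j] for j in cols)) - y  # d(log-loss)/d(logit)
                b -= lr * g
                for j in cols:
                    w[j] -= lr * g
    return w, b

def extract_total(tokens, w, b, vocab):
    """Score every token and return the one most likely to be the total amount."""
    probs = [sigmoid(b + sum(w[j] for j in active_columns(tokens, i, vocab)))
             for i in range(len(tokens))]
    return tokens[max(range(len(tokens)), key=probs.__getitem__)]

# Hypothetical toy training set: label 1 marks the total amount.
docs = [
    "Total: 118.50 EUR".split(),
    "Subtotal: 100.00 VAT: 18.50 Total: 118.50".split(),
    "Date: 2020-02-23 Total: 42.00".split(),
]
labels = [[0, 1, 0], [0, 0, 0, 0, 0, 1], [0, 0, 0, 1]]

vocab = build_vocab(docs)
w, b = train(docs, labels, vocab)
print(extract_total("VAT: 7.00 Total: 77.00".split(), w, b, vocab))
```

The amount “77.00” never occurs in the training data, so its own one-hot column is dropped; the prediction rests on the learned weight of the `prev=total:` feature. This is the kind of pattern the classifier discovers from labeled data rather than from a hand-written rule.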

The main advantages of this approach are [Palm, 2019]:

- the supervised learning algorithm can find complex patterns in the features that a human might not be able to define

- because the patterns are learned from the training data, they can be robust to noise that is common in that data, e.g. common misspellings, formatting differences, etc.

The main drawback of this approach is the requirement that every token be labeled. Furthermore, the classifier is often not transparent, i.e., it can be hard to understand why it makes a particular decision.

This post presents a bird’s-eye view of techniques for field extraction from business documents. The following posts will give a deeper understanding of the aforementioned tools and algorithms, especially in the area of information extraction from unstructured and semi-structured documents.

Literature

1. Rasmus Berg Palm. End-to-end information extraction from business documents. PhD thesis, 2019.

2. Laura Chiticariu, Yunyao Li, and Frederick R. Reiss. Rule-based information extraction is dead! Long live rule-based information extraction systems! In Proceedings of the 2013 Conference on Empirical Methods in Natural Language Processing, pages 827–832, 2013.

3. Tomas Mikolov, Ilya Sutskever, Kai Chen, Greg S. Corrado, and Jeff Dean. Distributed representations of words and phrases and their compositionality. In Advances in Neural Information Processing Systems, pages 3111–3119, 2013.